Introduction

This whole effort began when I bought my first Google Coral USB Accelerator, an affordable Edge TPU device that is highly recommended among Frigate users for adding object detection to Frigate without a powerful CPU or GPU.

It’s a low-cost, low-power device with very little running cost, which sounded like a great deal, so I bought it and paid $60 plus $20 for international shipping.

BUT what I didn’t know was that the pretrained models would give me tons of false positives. These models are trained on the COCO dataset, which looks nothing like security camera frames.

Plus, Google literally stopped updating this device a few years ago, and there is not much development around it anymore. Much of the tooling is outdated, and it’s a nightmare if you want to fine-tune a pretrained model.

Initially the plan was to fine-tune either the SSDLite MobileDet or the EfficientDet-Lite model, but I quickly found out that for the model to perform well I needed a reasonably sized dataset for fine-tuning. After a few days of wasting a ton of hours manually annotating frames from my security camera, I learned that the better practice is to use a smarter model, one too large to run on the edge, to help me with the annotations.

Training YOLO model for image annotations

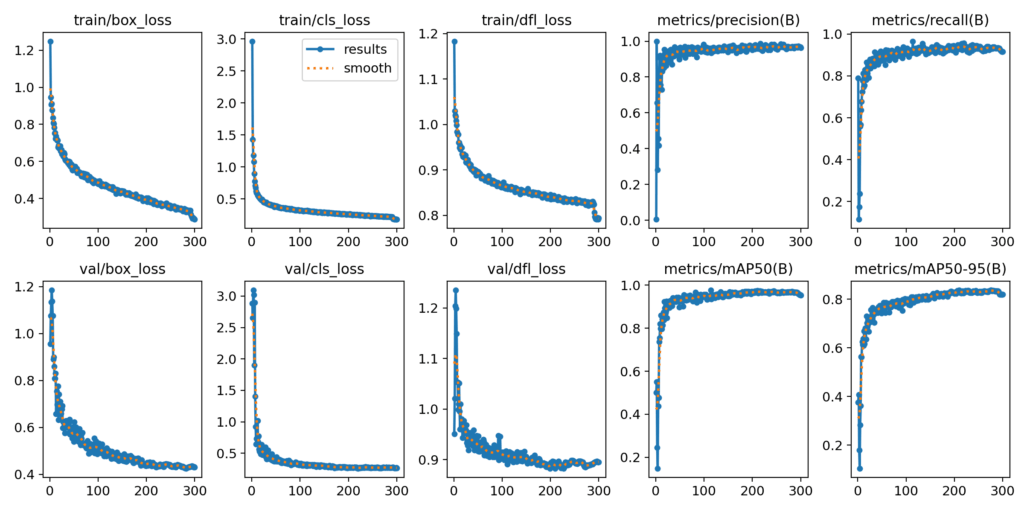

After a few days of learning about YOLO, I finally started training a YOLO model on the dataset I had manually prepared. Then I used the trained model to annotate more images, manually reviewed them, and repeated the training.

After a few iterations I finally got a close-to-perfect model to help me annotate my images. Because most of the annotations were correct, it took me very little time to review them, and after a few more days I finally had around 15k images to train my final model.
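For anyone repeating this loop: the predictions have to end up as YOLO label files before they can be reviewed and fed back into training. Here is a minimal sketch of that conversion, assuming the predicted boxes arrive as pixel-space corner coordinates. The function name `to_yolo_label` and the sample numbers are made up for illustration and are not taken from my actual pipeline.

```python
def to_yolo_label(class_id, x1, y1, x2, y2, img_w, img_h):
    """Convert a pixel-space corner box into one YOLO label line.

    YOLO labels store: class x_center y_center width height,
    with all four box values normalised to the 0..1 range.
    """
    cx = (x1 + x2) / 2 / img_w
    cy = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# Hypothetical detection: class 0 in a 640x480 frame.
line = to_yolo_label(0, 100, 50, 300, 250, 640, 480)
print(line)
```

One such line per object goes into a .txt file named after the image, which is what the manual review step then corrects.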

But the training did not go well. There are way too many parameters, and I have very little understanding of how they affect model training: for example, learning rate, optimizer, and batchnorm freezing.

I was overwhelmed by the parameters. There are literally tons of things that can go wrong, and I wasted many hours producing worse models that had more false positives, missed true positives, overfitted, and so on.

Frustrated, I thought: why don’t I just take the YOLO model that works so well, convert it to TensorFlow Lite, then compile it for the Edge TPU? That would save me a ton of time if it works!

Exporting YOLO models for edge TPU

The Ultralytics package itself comes with an export function that can export my trained YOLO11n model for the Edge TPU, so I went ahead and tried that first.

from ultralytics import YOLO

model = YOLO("/content/yolo11n-custom.pt")

model.export(format="edgetpu", imgsz=320)

Now this will use samples from COCO in the quantization process, but for now I simply wanted to know if it works. As expected, it didn’t: this command simply doesn’t work, and there’s an open issue about it on their GitHub repo.

So I spent another night letting my GPU train YOLOv8n, and this time it worked: I could export my custom-trained YOLOv8n model with the same code.

from ultralytics import YOLO

model = YOLO("/content/yolov8n-custom.pt")

model.export(format="edgetpu", imgsz=320)

Then I compiled the tflite for the Edge TPU:

edgetpu_compiler -s yolov8n.tflite

I then started Frigate with this model, but guess what? It did not work, again.

Error: Got value of type UINT8 but expected type INT8

Alright, this first error is pretty much self-explanatory: the model expects INT8 input but Frigate feeds it UINT8 data. After some hours of searching on GitHub and reading similar issues, I found dg_quantize.py in the DeGirum GitHub repo.

This line, which sets the input type to UINT8, is the key to the solution:

inf_type = tf.uint8

converter.inference_input_type = inf_type

After running this script I got the tflite, compiled it again with edgetpu_compiler, and immediately went to try it again with Frigate.
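For readers wondering why the two 8-bit types are not interchangeable, here is a small sketch of affine quantization in plain Python. The scale of 1/255 and the zero points of 0 and -128 are assumptions chosen to represent a typical image input normalised to 0..1. They are not values read from my model, and `quantize` is a hypothetical helper.

```python
def quantize(real_value, scale, zero_point, qmin, qmax):
    """Affine quantization: q = round(real / scale) + zero_point, clamped to the type's range."""
    q = round(real_value / scale) + zero_point
    return max(qmin, min(qmax, q))


SCALE = 1 / 255  # assumed: pixel values normalised to [0, 1]

pixel = 200          # a raw 8-bit pixel value from a camera frame
real = pixel / 255   # the real value the model is meant to see

as_uint8 = quantize(real, SCALE, 0, 0, 255)        # what a UINT8 input tensor holds
as_int8 = quantize(real, SCALE, -128, -128, 127)   # what an INT8 input tensor holds
print(as_uint8, as_int8)
```

The same pixel is stored as a different number in each type, so the interpreter rejects UINT8 frames for an INT8 input rather than silently misreading them. Declaring the input as UINT8 at conversion time lets the camera frames go in as they are.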

Does it work this time? Obviously not…

IndexError: list index out of range

This is the error that almost made me give up on using a YOLO model, because it requires knowledge and skills way beyond my understanding, and it’s not something you can easily ask AI to solve.

[2025-05-22 22:15:12] frigate.detectors.plugins.edgetpu_tfl INFO : Attempting to load TPU as usb

[2025-05-22 22:15:14] frigate.detectors.plugins.edgetpu_tfl INFO : TPU found

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

Process detector:coral:

Traceback (most recent call last):

File "/usr/lib/python3.11/multiprocessing/process.py", line 314, in _bootstrap

self.run()

File "/opt/frigate/frigate/util/process.py", line 41, in run_wrapper

return run(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.11/multiprocessing/process.py", line 108, in run

self._target(*self._args, **self._kwargs)

File "/opt/frigate/frigate/object_detection/base.py", line 136, in run_detector

detections = object_detector.detect_raw(input_frame)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/frigate/frigate/object_detection/base.py", line 86, in detect_raw

return self.detect_api.detect_raw(tensor_input=tensor_input)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/frigate/frigate/detectors/plugins/edgetpu_tfl.py", line 73, in detect_raw

class_ids = self.interpreter.tensor(self.tensor_output_details[1]["index"])()[0]

~~~~~~~~~~~~~~~~~~~~~~~~~~^^^

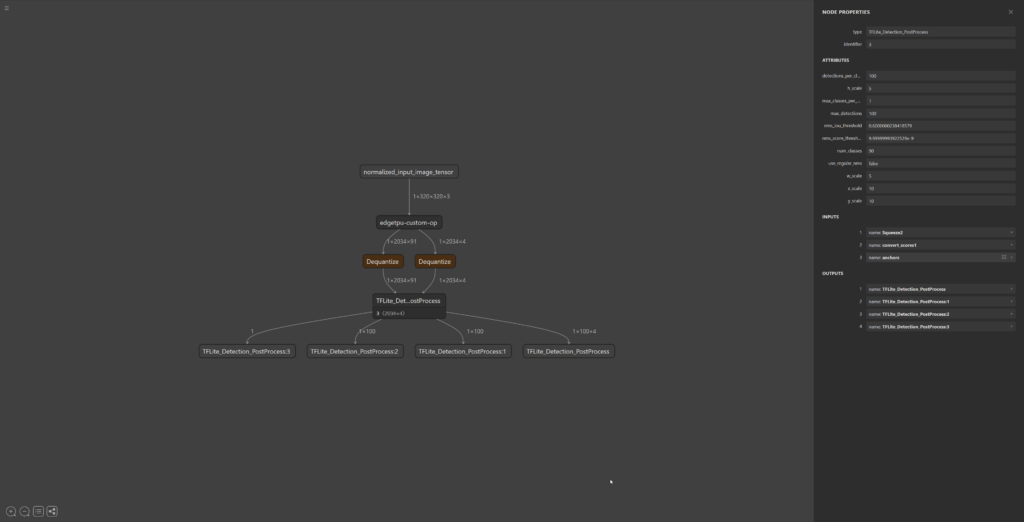

IndexError: list index out of rangeAfter spending hours trying to figure out what are the cause of this error, with the help of netron.app to visualize the model graph I realize the problem is the model output. This is the output from YOLO models:

And this is the model output of SSDLite MobileDet and the other pretrained models on the Coral site:

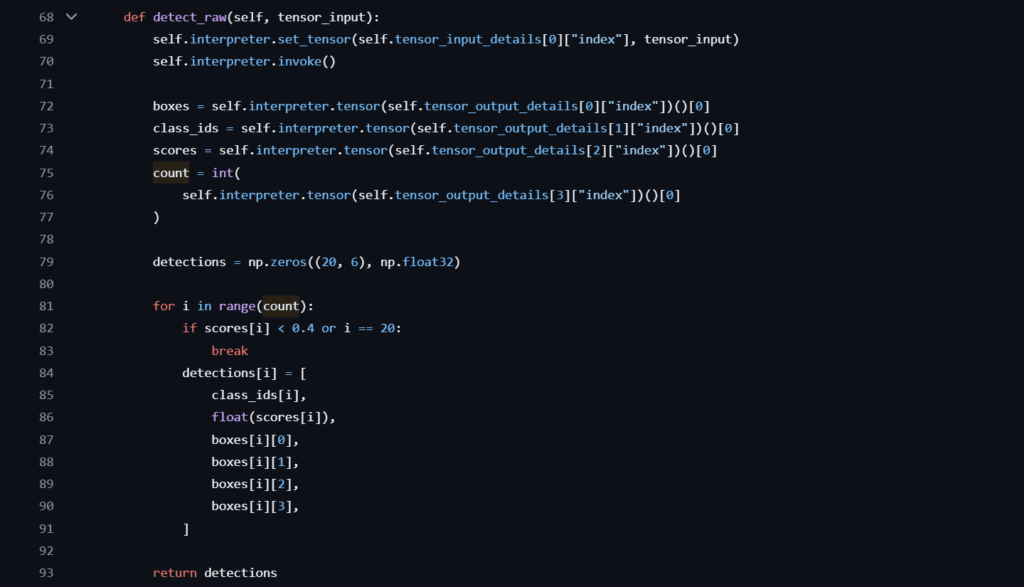

Frigate expects the post-processed output, as you can see in the file edgetpu_tfl.py in their repo.

I searched for possible solutions, hoping to find someone who had faced the same issue and had a ready-made fix, but I found none.

From my understanding, you either have to add post-processing to the model to decode the tensor output, or you have to modify the Frigate code to decode it.

I took some time to experiment with the former option, but it quickly became too exhausting and daunting, so I decided to modify the Frigate code instead to read the output properly.
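To give a rough idea of what "decoding the tensor output" involves, here is a minimal sketch in plain Python. It assumes the usual YOLOv8 detection layout of 4 + num_classes rows (cx, cy, w, h, then one score row per class) with one column per candidate box, and an int8 output that has to be dequantized first. The function name `decode_yolo_output`, the toy numbers, and the returned tuple layout are my own illustration, not Frigate's post_process_yolo or the code in my repo.

```python
def decode_yolo_output(rows, scale, zero_point, conf_threshold=0.4):
    """Decode a raw YOLOv8-style output into (class_id, score, x1, y1, x2, y2) tuples.

    rows: list of 4 + num_classes rows, each holding one quantized value
          per candidate box. Rows 0-3 are cx, cy, w, h; the rest are class scores.
    """
    # Undo the quantization: real = (q - zero_point) * scale
    deq = [[(q - zero_point) * scale for q in row] for row in rows]
    cx, cy, w, h = deq[0], deq[1], deq[2], deq[3]
    class_rows = deq[4:]

    detections = []
    for i in range(len(cx)):
        scores = [row[i] for row in class_rows]
        class_id = max(range(len(scores)), key=scores.__getitem__)
        score = scores[class_id]
        if score < conf_threshold:
            continue  # drop low-confidence candidates early
        # Convert centre/size format into corner format
        detections.append((class_id, score,
                           cx[i] - w[i] / 2, cy[i] - h[i] / 2,
                           cx[i] + w[i] / 2, cy[i] + h[i] / 2))
    return detections


# Toy output: 2 classes, 2 candidate boxes, assumed scale 1/256 and zero point -128.
toy_rows = [
    [0, 64],     # cx
    [0, -64],    # cy
    [-64, -64],  # w
    [0, -64],    # h
    [-96, -96],  # class 0 scores
    [96, -96],   # class 1 scores
]
detections = decode_yolo_output(toy_rows, scale=1 / 256, zero_point=-128)
print(detections)
```

A real model at imgsz=320 produces thousands of candidate columns instead of two, and many of them overlap, which matters later in this post.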

Modifying Frigate edgetpu_tfl.py

There was some discussion on the Frigate GitHub repo about running YOLO models on the Edge TPU, and it seems there was a branch working on YOLOv8 support, but the author deleted it because of Ultralytics licensing concerns.

Luckily the OpenVINO detector does support YOLOv8, and we can see that openvino.py calls the function post_process_yolo from util/model.py, so I just asked Gemini to help me implement the same thing in edgetpu_tfl.py.

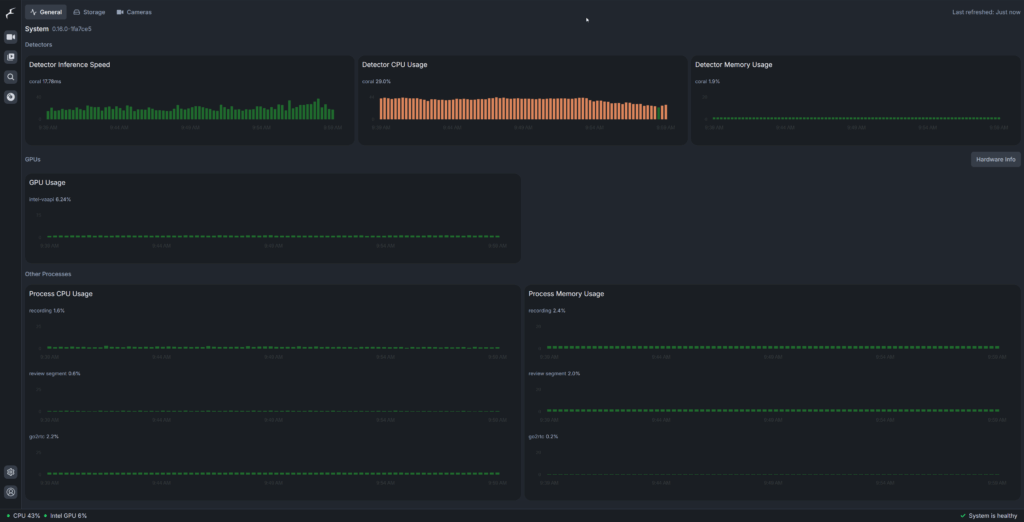

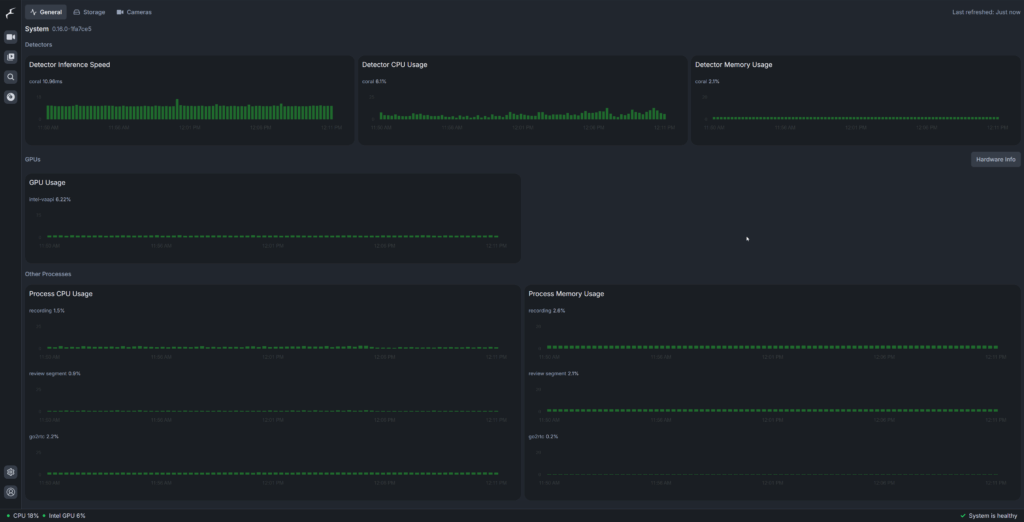

After a few retries the code finally worked, and my custom-trained YOLOv8n model is now running on the Coral TPU. The inference speed is not bad either, around 20ms, but that is probably because I only have 3 classes (I only want to detect person, dog and car).

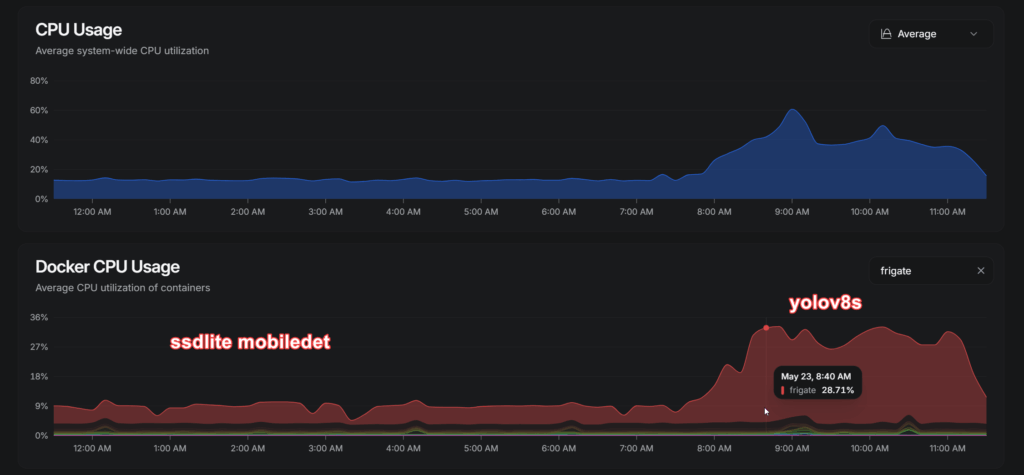

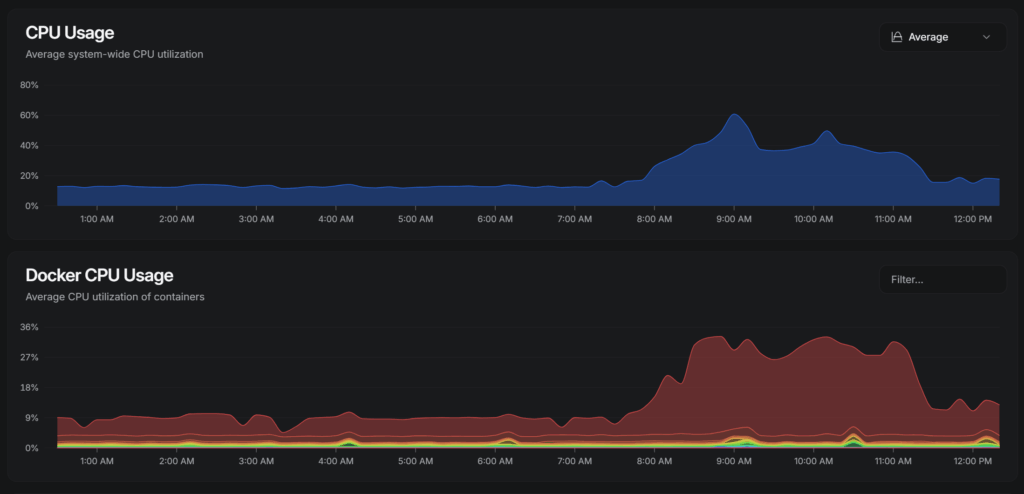

Resource usage spiked significantly

The CPU usage, however, is not great: it is much higher compared to running SSDLite MobileDet. Because the edgetpu_compiler wasn’t able to map all operations to the TPU, some of them run on my CPU, so my overall resource usage increased significantly.

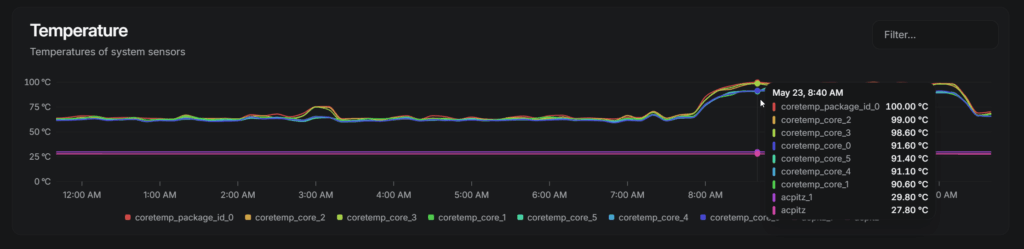

The CPU temperature also went up significantly, from an average of ~60 Celsius to ~90 Celsius.

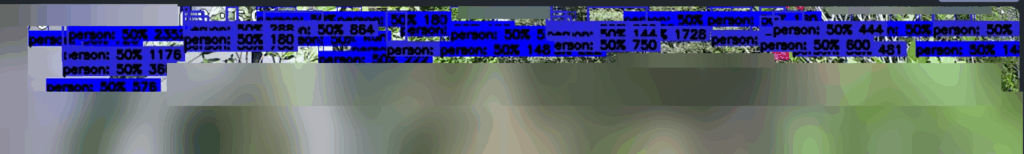

This obviously is not sustainable, so I had to figure out what was happening. When I opened the debug screen in Frigate, I noticed a crazy number of low-confidence bounding boxes overlapping each other.

There is no NMS in place! Unfortunately I couldn’t figure out how to add NMS into my model export pipeline. I tried adding the nms argument to the export like this:

from ultralytics import YOLO

model = YOLO("/content/yolov8n_300e.pt")

model.export(format="saved_model", imgsz=320, nms=True, conf=0.6, iou=0.4, agnostic_nms=False, max_det=100)

Adding NMS to the script

Then I ran the quantization script, but I am guessing the quantization process quantizes everything, including these NMS ops, into int8, and the edgetpu_compiler couldn’t map these operations to the TPU.

Number of operations that will run on Edge TPU: 4

Number of operations that will run on CPU: 541

Hopefully one day I will figure out how to make this work, but for now my only solution left is to modify Frigate’s edgetpu_tfl.py to implement NMS at the application level with cv2.dnn.NMSBoxes. Frigate itself also uses the same NMS function in util/model.py:

indices = cv2.dnn.NMSBoxes(boxes, scores, score_threshold=0.7, nms_threshold=0.4)

Frigate hardcodes the confidence score threshold to 0.4, but with my model that still produces a lot of bounding boxes, and since my model is fine-tuned I just set a higher threshold to reduce false positives.
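To show what that call is doing conceptually, here is a plain-Python sketch of greedy non-maximum suppression. It is a stand-in written for illustration, not OpenCV's implementation and not the code in my repo. It assumes boxes in [x, y, w, h] format, and the helper names `iou` and `nms` and the sample boxes are made up.

```python
def iou(a, b):
    """Intersection over union of two [x, y, w, h] boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, score_threshold, nms_threshold):
    """Greedy NMS: return indices of the kept boxes, highest score first."""
    # 1. Drop boxes below the confidence threshold, sort the rest by score.
    order = sorted((i for i, s in enumerate(scores) if s > score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    # 2. Keep a box only if it does not overlap too much with a better one.
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= nms_threshold for k in keep):
            keep.append(i)
    return keep


# Three near-duplicate boxes around one object, plus one separate object.
boxes = [[10, 10, 100, 100], [12, 12, 100, 100], [200, 200, 50, 50], [11, 9, 100, 100]]
scores = [0.9, 0.8, 0.75, 0.3]
kept = nms(boxes, scores, score_threshold=0.7, nms_threshold=0.4)
print(kept)
```

Raising the score threshold shrinks the list before the overlap check even starts, which is why a higher value cuts both the box count and the work done on the CPU.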

Final results with YOLOv8n

The inference time has dropped to around 10ms with lower TPU usage, and my CPU usage and temperature have gone back to normal. Well, actually slightly higher compared to using SSDLite MobileDet, but let’s just hope the accuracy is worth it.

The CPU temperature has also returned to a normal level, hovering around ~70 Celsius.

Stationary object being redetected over and over…

However, there is another small bug, or maybe a misconfiguration: stationary objects are detected and undetected over and over again. If I am recording on motion only, this records hours of video of objects that are not moving.

But this seemed to happen too when I was using the base model (SSDLite MobileDet). Maybe certain settings can help solve this issue, but I am not sure. I think it is more the model’s fault for producing jittery bounding boxes: in the debug view I can see the bounding box dancing around the car parked in my driveway. Frigate probably doesn’t treat these as the same object, and thus detection events are happening constantly.

This means I will need to fine-tune the model better, or train it all over again, so that it produces more consistent output without being overly influenced by noise in the frames.

Since that will take more time, for now I will just modify the Frigate configuration to make it less strict about the stationary object criteria on the camera with issues:

detect:
  max_disappeared: 35
  stationary:
    interval: 50
    threshold: 10

With detect fps set at 5, this makes Frigate consider an object that stays still for 2 seconds as stationary, including a person, and consider it gone if it has left the frame for more than 7 seconds. It’s probably not ideal, but it’s a temporary workaround until I have a better-tuned model.
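These settings are counted in frames, so the seconds quoted above come from dividing by the detect fps. A quick sketch of that arithmetic follows. The dictionary keys are just labels for this example, and I am assuming all three values are frame counts.

```python
DETECT_FPS = 5  # detect fps on the affected camera

# Values from the config above, expressed in frames.
settings_in_frames = {
    "stationary.threshold": 10,
    "max_disappeared": 35,
    "stationary.interval": 50,
}

# frames / fps = seconds
settings_in_seconds = {
    name: frames / DETECT_FPS for name, frames in settings_in_frames.items()
}

for name, seconds in settings_in_seconds.items():
    print(f"{name}: {seconds:g} s")
```

If the detect fps on a camera changes, the frame counts need to be rescaled to keep the same timings.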

YOLO11 models don’t work

The post-processing code does not work for the YOLO11 model, and I am not exactly sure why. I was able to use YOLO11n exported in ONNX format with OpenVINO, and OpenVINO uses the same post-processing logic for YOLOv8 and YOLO11n, so I guess the problem might come from my Edge TPU compiling pipeline.

Maybe the edgetpu_compiler is not able to handle the new layers introduced in YOLO11. If that is the case, it would explain why the Ultralytics package couldn’t export YOLO11n.pt to edgetpu format earlier.

Whatever the issue is, YOLOv8n and YOLO11n have similar accuracy. Maybe YOLO11n is a tad more efficient, but for my use case that isn’t my top priority right now.

The modified edgetpu_tfl.py

I posted the modified edgetpu_tfl.py to my GitHub repo in case anyone needs it:

https://github.com/selfhosteverything/frigate-yolo-edgetpu

It also contains the script and Colab code I use to compile the Edge TPU tflite file from the yolov8n.pt model file. I recommend doing that in a free Google Colab instance if you have issues exporting in another environment.